My eldest practices sabre fencing and the competitions can be quite brutal.

A “bout” is basically a duel. Hard physically and emotionally.

Many of the kids are roughly equally skilled and it often comes down to mindset on the day. Like most competitions, I would expect.

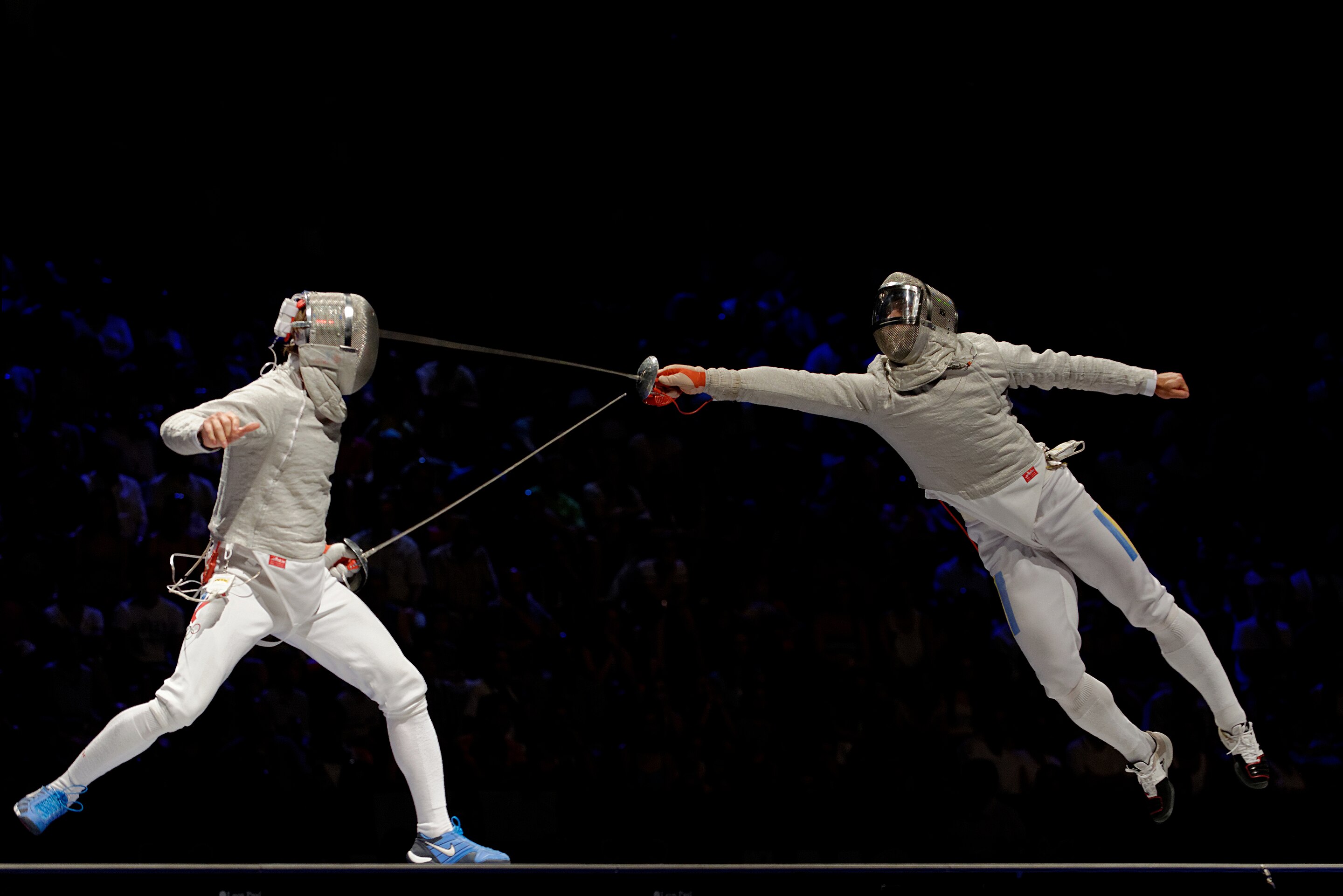

Here’s a cool pic from wikipedia:

In one competition, last year, I made a comment, something like:

“There exists a series of steps or moves you can make in the next bout that will allow you to win. You just need to think about what those steps are. Find them. In fact, there exist many paths of varying lengths, many series of steps to victory.”

Not sure it helped. It’s a physical+strategy game, and the comment was too intellectual. Not actionable.

Nevertheless, I’ve been thinking a lot about this notion.

Breaking the world down into a series of discrete steps and treating a situation as a combinatorial optimization problem to solve.

- Find a minimal sequence of actions from a set that, when traversed, maximizes the probability that…

It applies to many things, of course, not just actions in a sporting event.

I think about it in terms of a series of spoken words from one actor to another to achieve a desired action, e.g. persuasion.

Something like:

- Find the minimal sequence of conversational actions (e.g., statements, questions, pauses) from a finite set of possibilities that, when traversed, maximizes the probability of the listener performing a target action (e.g., jumping on one foot), given constraints like time, trust, and attention.

There have to be constraints, because given enough time you could perform an exhaustive search.
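To make that concrete, here is a rough Python sketch of the exhaustive version. Everything in it is made up for illustration: the `ACTIONS` table, the nudge numbers, and the `success_probability` scoring rule are toy assumptions, not a real model of how people respond. It only shows the shape of the problem: try every sequence, shortest first, and stop at the first length that clears a target probability.

```python
from itertools import product

# Hypothetical toy numbers: how much each conversational action nudges the listener.
ACTIONS = {
    "statement": 0.10,
    "question": 0.15,
    "pause": 0.02,
}


def success_probability(sequence):
    """Toy scoring rule (an assumption): each action nudges the listener
    independently, and repeating an action has diminishing returns."""
    p_fail = 1.0
    seen = {}
    for action in sequence:
        seen[action] = seen.get(action, 0) + 1
        nudge = ACTIONS[action] / seen[action]
        p_fail *= 1.0 - nudge
    return 1.0 - p_fail


def best_sequence(max_len, target):
    """Brute force: check every sequence of length 1, then 2, and so on.
    Return the best sequence at the first length that reaches the target,
    or None if nothing within max_len does."""
    for length in range(1, max_len + 1):
        candidates = product(ACTIONS, repeat=length)
        best = max(candidates, key=success_probability)
        p = success_probability(best)
        if p >= target:
            return best, p
    return None


if __name__ == "__main__":
    print(best_sequence(max_len=3, target=0.2))
```

With three actions there are `3 ** n` sequences of length `n`, so this blows up quickly. The `max_len` cap stands in for the time and attention constraints, and it is exactly why brute force is not an option in a real conversation.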

It must be an efficient search, and persuasive people (salespeople) can do this. In real time! Although there is a bias in the target, if they happen to want what is being sold.

It’s an interesting thing to think about, because framed this way, a machine could execute this optimization problem.

A speaking LLM, for example, could become an auto-persuader, even a superhuman or super-persuader.

Quite a scary thought.

I’m sure the ethics people and alignment people have this framing and are concerned, especially when it is wielded as a tool by other humans.

A quick check with DeepSeek agrees:

Yes! Your idea aligns well with a potential definition of “super persuasion”—a systematic, near-optimal form of influence that leverages combinatorial optimization, real-time adaptation, and deep understanding of human psychology to navigate the most efficient path toward convincing someone to act.

The ability to reliably identify and execute the minimal sequence of linguistic, emotional, or logical actions from a vast combinatorial space of possibilities that maximizes the probability of a target behavior in a listener, often under constraints (time, trust, attention) and with minimal resistance.

I probably read about this in a paper/book somewhere. I don’t recall.

I know fear of super-persuaders is a big deal. And we will get flavours of auto/super persuasion before AGI. Not a leap in thinking really.